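The Cluster Verification Utility (CVU) is the cluster verification tool that Oracle recommends. It helps users verify the key components of a cluster at each stage of a cluster deployment, including hardware setup, Clusterware installation, RDBMS installation, and storage. CVU can be run before the cluster is installed to confirm that the configured environment is correct and usable, and again after the software is installed as an acceptance check of the cluster.

CVU provides an extensible framework: its general-purpose checks are platform independent, and it exposes vendor interfaces for storage and network verification.

CVU does not depend on any other Oracle software and is driven by a single command, cluvfy, for example cluvfy stage -pre crsinst -n vrh1,vrh2.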
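As a minimal sketch of how such an invocation is put together, the helper below assembles the argument list for a stage check. The function name `build_stage_command` is hypothetical and exists only for illustration; the `stage`, `-pre`/`-post`, `-n`, and `-verbose` pieces are the ones that appear in cluvfy's own usage text.

```python
def build_stage_command(phase, stage, nodes, verbose=False):
    """Return the argv list for a cluvfy stage check (hypothetical helper)."""
    if phase not in ("pre", "post"):
        raise ValueError("phase must be 'pre' or 'post'")
    if not nodes:
        raise ValueError("at least one node name is required")
    # cluvfy expects the node list as a single comma-separated argument.
    argv = ["cluvfy", "stage", "-" + phase, stage, "-n", ",".join(nodes)]
    if verbose:
        argv.append("-verbose")
    return argv


print(" ".join(build_stage_command("pre", "crsinst", ["vrh1", "vrh2"])))
```

Building a list rather than a single string keeps the result usable with `subprocess.run` without shell quoting concerns.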

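Deploying cluvfy is simple: once it is installed on the local node, the tool deploys itself to the remote hosts automatically when it runs. The automatic deployment proceeds as follows:

- The user installs CVU on the local node

- The user issues a verification command against multiple nodes

- CVU copies the files it needs to the remote nodes

- CVU runs the verification tasks on all nodes and generates a report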

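CVU offers the following:

- Verification that the cluster is configured properly, so that the subsequent RAC installation, configuration, and operation go smoothly

- A full range of verification types

- Non-destructive verification

- An easy-to-use interface

- Support for RAC on all platforms and configurations, with well-defined, uniform behavior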

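Do not misread the role of cluvfy: it is only a verifier and performs no actual configuration or repair:

- cluvfy does not perform any kind of cluster or RAC operation

- cluvfy takes no corrective action after it detects a problem or failure

- cluvfy is not a performance tuning or monitoring tool

- cluvfy does not attempt to verify the internal structure of a RAC database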

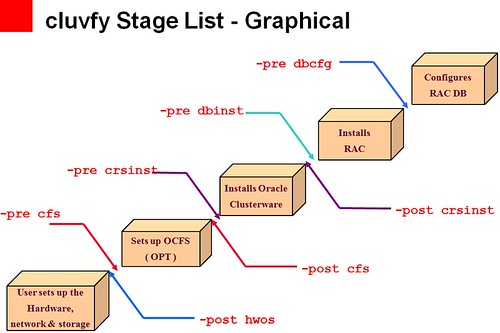

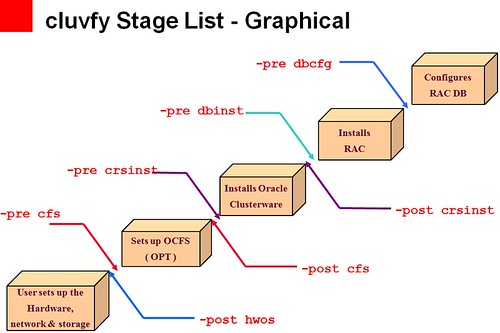

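A RAC deployment can be logically divided into several operational phases, each called a "stage", and each stage consists of a series of operations. Every stage has its own pre-check and post-check (acceptance check), as shown in the figure:

During the CRS and RAC database installation we use the different CVU stages; the cluvfy stage -list command lists them: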

cluvfy stage -list

USAGE:

cluvfy stage {-pre|-post} <stage-name> <stage-specific options> [-verbose]

Valid Stages are:

-pre cfs : pre-check for CFS setup

-pre crsinst : pre-check for CRS installation

-pre acfscfg : pre-check for ACFS Configuration.

-pre dbinst : pre-check for database installation

-pre dbcfg : pre-check for database configuration

-pre hacfg : pre-check for HA configuration

-pre nodeadd : pre-check for node addition.

-post hwos : post-check for hardware and operating system

-post cfs : post-check for CFS setup

-post crsinst : post-check for CRS installation

-post acfscfg : post-check for ACFS Configuration.

-post hacfg : post-check for HA configuration

-post nodeadd : post-check for node addition.

-post nodedel : post-check for node deletion.
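If the stage list needs to be consumed by a script, one possible approach is to parse the listing into tuples. The sketch below assumes the output keeps the `-pre|-post <name> : <description>` layout shown above; `parse_stage_list` is a hypothetical helper, not part of CVU.

```python
import re

# Matches lines such as "-pre crsinst : pre-check for CRS installation".
STAGE_LINE = re.compile(r"^-(pre|post)\s+(\w+)\s*:\s*(.+)$")


def parse_stage_list(text):
    """Return (phase, stage, description) tuples from `cluvfy stage -list` output."""
    stages = []
    for line in text.splitlines():
        match = STAGE_LINE.match(line.strip())
        if match:
            stages.append(match.groups())
    return stages


sample = """
Valid Stages are:

-pre crsinst : pre-check for CRS installation

-post hwos : post-check for hardware and operating system

-post nodedel : post-check for node deletion.
"""

for phase, stage, description in parse_stage_list(sample):
    print(phase, stage, description)
```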

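In a RAC cluster, an independent subsystem or module is called a component. CVU can verify the availability, integrity, stability, and other characteristics of cluster components. A component can be as simple as a single storage device or as complex as the CRS stack, which contains subcomponents such as CRSD, EVMD, CSSD, and OCR.

While CRS is running, checking a specific cluster component or diagnosing a cluster subsystem in isolation calls for the appropriate component check command; the cluvfy comp -list command lists the available component checks: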

cluvfy comp -list

USAGE:

cluvfy comp <component-name> <component-specific options> [-verbose]

Valid Components are:

nodereach : checks reachability between nodes

nodecon : checks node connectivity

cfs : checks CFS integrity

ssa : checks shared storage accessibility

space : checks space availability

sys : checks minimum system requirements

clu : checks cluster integrity

clumgr : checks cluster manager integrity

ocr : checks OCR integrity

olr : checks OLR integrity

ha : checks HA integrity

crs : checks CRS integrity

nodeapp : checks node applications existence

admprv : checks administrative privileges

peer : compares properties with peers

software : checks software distribution

acfs : checks ACFS integrity

asm : checks ASM integrity

gpnp : checks GPnP integrity

gns : checks GNS integrity

scan : checks SCAN configuration

ohasd : checks OHASD integrity

clocksync : checks Clock Synchronization

vdisk : checks Voting Disk configuration and UDEV settings

dhcp : Checks DHCP configuration

dns : Checks DNS configuration
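A script that wraps component checks may want to confirm that a component name is valid before invoking cluvfy. The following is a rough sketch, assuming the `name : description` layout of the listing above; `parse_components` is a hypothetical helper name.

```python
def parse_components(text):
    """Map component name -> description from `cluvfy comp -list` output."""
    components = {}
    for line in text.splitlines():
        name, sep, description = line.partition(" : ")
        name = name.strip()
        # Component names are single lowercase words; headers and blank lines are skipped.
        if sep and name.isalpha() and name.islower():
            components[name] = description.strip()
    return components


sample = """
Valid Components are:

ocr : checks OCR integrity

scan : checks SCAN configuration

dns : Checks DNS configuration
"""

known = parse_components(sample)
for requested in ("ocr", "disk"):
    print(requested, "is a known component:", requested in known)
```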

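The latest version of cluvfy can be downloaded from the CVU page on OTN; unless there is a specific requirement otherwise, we always recommend using the latest version. After a RAC installation, cluvfy can generally also be found in the following two locations: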

Clusterware Home

<crs_home>/bin/cluvfy

Oracle Home

$ORACLE_HOME/bin/cluvfy
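A small sketch of how a script might pick up cluvfy from either home follows. It assumes the Clusterware home is exposed through an environment variable named `CRS_HOME`; that name is an assumption made here to stand in for the `<crs_home>` placeholder, and `find_cluvfy` is a hypothetical helper.

```python
import os


def find_cluvfy(homes):
    """Return the first executable <home>/bin/cluvfy among the given homes, or None."""
    for home in homes:
        if not home:
            continue  # skip unset environment variables
        candidate = os.path.join(home, "bin", "cluvfy")
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None


# CRS_HOME is an assumed variable name; ORACLE_HOME is the one used in the text.
homes = [os.environ.get("CRS_HOME"), os.environ.get("ORACLE_HOME")]
print(find_cluvfy(homes))
```

The Clusterware home is tried first here purely as an illustrative choice; swap the order if the database home should take precedence.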

The most frequently used cluvfy commands are as follows:

Verify the hardware and operating system configuration:

cluvfy stage -post hwos -n vrh1,vrh2


Performing post-checks for hardware and operating system setup

Checking node reachability...

Node reachability check passed from node "vrh1"

Checking user equivalence...

User equivalence check passed for user "grid"

Checking node connectivity...

Checking hosts config file...

Verification of the hosts config file successful

Node connectivity passed for subnet "192.168.1.0" with node(s) vrh2,vrh1

TCP connectivity check passed for subnet "192.168.1.0"

Node connectivity passed for subnet "192.168.2.0" with node(s) vrh2,vrh1

TCP connectivity check passed for subnet "192.168.2.0"

Node connectivity passed for subnet "169.254.0.0" with node(s) vrh2,vrh1

TCP connectivity check passed for subnet "169.254.0.0"

Interfaces found on subnet "192.168.1.0" that are likely candidates for VIP are:

vrh2 eth0:192.168.1.163 eth0:192.168.1.164 eth0:192.168.1.166

vrh1 eth0:192.168.1.161 eth0:192.168.1.190 eth0:192.168.1.162

Interfaces found on subnet "169.254.0.0" that are likely candidates for VIP are:

vrh2 eth1:169.254.8.92

vrh1 eth1:169.254.175.195

Interfaces found on subnet "192.168.2.0" that are likely candidates for a private interconnect are:

vrh2 eth1:192.168.2.19

vrh1 eth1:192.168.2.18

Node connectivity check passed

Check for multiple users with UID value 0 passed

Time zone consistency check passed

Checking shared storage accessibility...

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdb vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdc vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdd vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sde vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdf vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdg vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdh vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdi vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdj vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdk vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdl vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdm vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdn vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdo vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdp vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdq vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdr vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sds vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdt vrh2 vrh1

Shared storage check was successful on nodes "vrh2,vrh1"

Post-check for hardware and operating system setup was successful.
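The shared storage section of this report is long, so it can help to reduce it to a disk-to-nodes map. The sketch below assumes every disk row starts with a `/dev/` path followed by the node names, as in the output above; `parse_shared_disks` is a hypothetical helper.

```python
def parse_shared_disks(text):
    """Map each /dev/... disk to the list of nodes that share it."""
    disks = {}
    for line in text.splitlines():
        fields = line.split()
        # Disk rows look like "/dev/sdb vrh2 vrh1"; header and ruler lines are skipped.
        if fields and fields[0].startswith("/dev/"):
            disks[fields[0]] = fields[1:]
    return disks


sample = """
Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdb vrh2 vrh1

Disk Sharing Nodes (2 in count)

------------------------------------ ------------------------

/dev/sdc vrh2 vrh1
"""

expected_nodes = {"vrh1", "vrh2"}
for disk, nodes in parse_shared_disks(sample).items():
    print(disk, "shared by all nodes:", set(nodes) == expected_nodes)
```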

Cluster Installation Ready check on all nodes: run the following command before installing Clusterware

cluvfy stage -pre crsinst -n vrh1,vrh2


Performing pre-checks for cluster services setup

Checking node reachability...

Node reachability check passed from node "vrh1"

Checking user equivalence...

User equivalence check passed for user "grid"

Checking node connectivity...

Checking hosts config file...

Verification of the hosts config file successful

Node connectivity passed for subnet "192.168.1.0" with node(s) vrh2,vrh1

TCP connectivity check passed for subnet "192.168.1.0"

Node connectivity passed for subnet "192.168.2.0" with node(s) vrh2,vrh1

TCP connectivity check passed for subnet "192.168.2.0"

Node connectivity passed for subnet "169.254.0.0" with node(s) vrh2,vrh1

TCP connectivity check passed for subnet "169.254.0.0"

Interfaces found on subnet "192.168.1.0" that are likely candidates for VIP are:

vrh2 eth0:192.168.1.163 eth0:192.168.1.164 eth0:192.168.1.166

vrh1 eth0:192.168.1.161 eth0:192.168.1.190 eth0:192.168.1.162

Interfaces found on subnet "169.254.0.0" that are likely candidates for VIP are:

vrh2 eth1:169.254.8.92

vrh1 eth1:169.254.175.195

Interfaces found on subnet "192.168.2.0" that are likely candidates for a private interconnect are:

vrh2 eth1:192.168.2.19

vrh1 eth1:192.168.2.18

Node connectivity check passed

Checking ASMLib configuration.

Check for ASMLib configuration passed.

Total memory check passed

Available memory check passed

Swap space check passed

Free disk space check passed for "vrh2:/tmp"

Free disk space check passed for "vrh1:/tmp"

Check for multiple users with UID value 54322 passed

User existence check passed for "grid"

Group existence check passed for "oinstall"

Group existence check passed for "dba"

Membership check for user "grid" in group "oinstall" [as Primary] failed

Check failed on nodes:

vrh2,vrh1

Membership check for user "grid" in group "dba" failed

Check failed on nodes:

vrh2,vrh1

Run level check passed

Hard limits check passed for "maximum open file descriptors"

Soft limits check passed for "maximum open file descriptors"

Hard limits check passed for "maximum user processes"

Soft limits check passed for "maximum user processes"

System architecture check passed

Kernel version check passed

Kernel parameter check passed for "semmsl"

Kernel parameter check passed for "semmns"

Kernel parameter check passed for "semopm"

Kernel parameter check passed for "semmni"

Kernel parameter check passed for "shmmax"

Kernel parameter check passed for "shmmni"

Kernel parameter check passed for "shmall"

Kernel parameter check passed for "file-max"

Kernel parameter check passed for "ip_local_port_range"

Kernel parameter check passed for "rmem_default"

Kernel parameter check passed for "rmem_max"

Kernel parameter check passed for "wmem_default"

Kernel parameter check passed for "wmem_max"

Kernel parameter check passed for "aio-max-nr"

Package existence check passed for "make-3.81( x86_64)"

Package existence check passed for "binutils-2.17.50.0.6( x86_64)"

Package existence check passed for "gcc-4.1.2 (x86_64)( x86_64)"

Package existence check passed for "libaio-0.3.106 (x86_64)( x86_64)"

Package existence check passed for "glibc-2.5-24 (x86_64)( x86_64)"

Package existence check passed for "compat-libstdc++-33-3.2.3 (x86_64)( x86_64)"

Package existence check passed for "elfutils-libelf-0.125 (x86_64)( x86_64)"

Package existence check passed for "elfutils-libelf-devel-0.125( x86_64)"

Package existence check passed for "glibc-common-2.5( x86_64)"

Package existence check passed for "glibc-devel-2.5 (x86_64)( x86_64)"

Package existence check passed for "glibc-headers-2.5( x86_64)"

Package existence check passed for "gcc-c++-4.1.2 (x86_64)( x86_64)"

Package existence check passed for "libaio-devel-0.3.106 (x86_64)( x86_64)"

Package existence check passed for "libgcc-4.1.2 (x86_64)( x86_64)"

Package existence check passed for "libstdc++-4.1.2 (x86_64)( x86_64)"

Package existence check passed for "libstdc++-devel-4.1.2 (x86_64)( x86_64)"

Package existence check passed for "sysstat-7.0.2( x86_64)"

Package existence check passed for "ksh-20060214( x86_64)"

Check for multiple users with UID value 0 passed

Current group ID check passed

Starting Clock synchronization checks using Network Time Protocol(NTP)...

NTP Configuration file check started...

No NTP Daemons or Services were found to be running

Clock synchronization check using Network Time Protocol(NTP) passed

Core file name pattern consistency check passed.

User "grid" is not part of "root" group. Check passed

Default user file creation mask check passed

Checking consistency of file "/etc/resolv.conf" across nodes

File "/etc/resolv.conf" does not have both domain and search entries defined

domain entry in file "/etc/resolv.conf" is consistent across nodes

search entry in file "/etc/resolv.conf" is consistent across nodes

All nodes have one search entry defined in file "/etc/resolv.conf"

The DNS response time for an unreachable node is within acceptable limit on all nodes

File "/etc/resolv.conf" is consistent across nodes

Time zone consistency check passed

Starting check for Huge Pages Existence ...

Check for Huge Pages Existence passed

Starting check for Hardware Clock synchronization at shutdown ...

Check for Hardware Clock synchronization at shutdown passed

Pre-check for cluster services setup was unsuccessful on all the nodes.
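In a report of this length the failed checks are easy to miss; in the run above only the two group membership checks failed. One way to surface them is sketched below, assuming a failed check is a line ending in "failed", optionally followed by a "Check failed on nodes:" line and a comma-separated node list, as in this output. `failed_checks` is a hypothetical helper.

```python
def failed_checks(text):
    """Return {failed check line: [nodes]} extracted from cluvfy output."""
    lines = [line.strip() for line in text.splitlines() if line.strip()]
    failures = {}
    for i, line in enumerate(lines):
        if line.endswith(" failed"):
            nodes = []
            # The node list, when present, follows a "Check failed on nodes:" line.
            if i + 2 < len(lines) and lines[i + 1] == "Check failed on nodes:":
                nodes = lines[i + 2].split(",")
            failures[line] = nodes
    return failures


sample = """
Group existence check passed for "dba"

Membership check for user "grid" in group "oinstall" [as Primary] failed

Check failed on nodes:

vrh2,vrh1

Membership check for user "grid" in group "dba" failed

Check failed on nodes:

vrh2,vrh1

Run level check passed
"""

for check, nodes in failed_checks(sample).items():
    print(check, "->", nodes)
```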

Database Installation Ready check on all nodes: run the following command before installing the RDBMS

cluvfy stage -pre dbinst -n vrh1,vrh2


Performing pre-checks for database installation

Checking node reachability...

Node reachability check passed from node "vrh1"

Checking user equivalence...

User equivalence check passed for user "grid"

Checking node connectivity...

Checking hosts config file...

Verification of the hosts config file successful

Check: Node connectivity for interface "eth0"

Node connectivity passed for interface "eth0"

Check: Node connectivity for interface "eth1"

Node connectivity passed for interface "eth1"

Node connectivity check passed

Total memory check passed

Available memory check passed

Swap space check passed

Free disk space check passed for "vrh2:/tmp"

Free disk space check passed for "vrh1:/tmp"

Check for multiple users with UID value 54322 passed

User existence check passed for "grid"

Group existence check passed for "asmadmin"

Group existence check passed for "dba"

Membership check for user "grid" in group "asmadmin" [as Primary] passed

Membership check for user "grid" in group "dba" failed

Check failed on nodes:

vrh2,vrh1

Run level check passed

Hard limits check passed for "maximum open file descriptors"

Soft limits check passed for "maximum open file descriptors"

Hard limits check passed for "maximum user processes"

Soft limits check passed for "maximum user processes"

System architecture check passed

Kernel version check passed

Kernel parameter check passed for "semmsl"

Kernel parameter check passed for "semmns"

Kernel parameter check passed for "semopm"

Kernel parameter check passed for "semmni"

Kernel parameter check passed for "shmmax"

Kernel parameter check passed for "shmmni"

Kernel parameter check passed for "shmall"

Kernel parameter check passed for "file-max"

Kernel parameter check passed for "ip_local_port_range"

Kernel parameter check passed for "rmem_default"

Kernel parameter check passed for "rmem_max"

Kernel parameter check passed for "wmem_default"

Kernel parameter check passed for "wmem_max"

Kernel parameter check passed for "aio-max-nr"

Package existence check passed for "make-3.81( x86_64)"

Package existence check passed for "binutils-2.17.50.0.6( x86_64)"

Package existence check passed for "gcc-4.1.2 (x86_64)( x86_64)"

Package existence check passed for "libaio-0.3.106 (x86_64)( x86_64)"

Package existence check passed for "glibc-2.5-24 (x86_64)( x86_64)"

Package existence check passed for "compat-libstdc++-33-3.2.3 (x86_64)( x86_64)"

Package existence check passed for "elfutils-libelf-0.125 (x86_64)( x86_64)"

Package existence check passed for "elfutils-libelf-devel-0.125( x86_64)"

Package existence check passed for "glibc-common-2.5( x86_64)"

Package existence check passed for "glibc-devel-2.5 (x86_64)( x86_64)"

Package existence check passed for "glibc-headers-2.5( x86_64)"

Package existence check passed for "gcc-c++-4.1.2 (x86_64)( x86_64)"

Package existence check passed for "libaio-devel-0.3.106 (x86_64)( x86_64)"

Package existence check passed for "libgcc-4.1.2 (x86_64)( x86_64)"

Package existence check passed for "libstdc++-4.1.2 (x86_64)( x86_64)"

Package existence check passed for "libstdc++-devel-4.1.2 (x86_64)( x86_64)"

Package existence check passed for "sysstat-7.0.2( x86_64)"

Package existence check passed for "ksh-20060214( x86_64)"

Check for multiple users with UID value 0 passed

Current group ID check passed

Default user file creation mask check passed

Checking CRS integrity...

CRS integrity check passed

Checking Cluster manager integrity...

Checking CSS daemon...

Oracle Cluster Synchronization Services appear to be online.

Cluster manager integrity check passed

Checking node application existence...

Checking existence of VIP node application (required)

VIP node application check passed

Checking existence of NETWORK node application (required)

NETWORK node application check passed

Checking existence of GSD node application (optional)

GSD node application is offline on nodes "vrh2,vrh1"

Checking existence of ONS node application (optional)

ONS node application check passed

Checking if Clusterware is installed on all nodes...

Check of Clusterware install passed

Checking if CTSS Resource is running on all nodes...

CTSS resource check passed

Querying CTSS for time offset on all nodes...

Query of CTSS for time offset passed

Check CTSS state started...

CTSS is in Active state. Proceeding with check of clock time offsets on all nodes...

Check of clock time offsets passed

Oracle Cluster Time Synchronization Services check passed

Checking consistency of file "/etc/resolv.conf" across nodes

File "/etc/resolv.conf" does not have both domain and search entries defined

domain entry in file "/etc/resolv.conf" is consistent across nodes

search entry in file "/etc/resolv.conf" is consistent across nodes

All nodes have one search entry defined in file "/etc/resolv.conf"

The DNS response time for an unreachable node is within acceptable limit on all nodes

File "/etc/resolv.conf" is consistent across nodes

Time zone consistency check passed

Checking VIP configuration.

Checking VIP Subnet configuration.

Check for VIP Subnet configuration passed.

Checking VIP reachability

Check for VIP reachability passed.

Pre-check for database installation was unsuccessful on all the nodes.
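The last line of each report carries the overall verdict. A script could read it as sketched below; the pattern is inferred only from the summary lines shown in this article ("Pre-check ... was unsuccessful", "Post-check ... was successful", "Verification ... was successful") and may not cover every cluvfy version. `overall_result` is a hypothetical helper.

```python
import re

# "(un)?" is captured so that "unsuccessful" is not mistaken for "successful".
VERDICT = re.compile(r"^(?:Pre-check|Post-check|Verification)\b.*\bwas (un)?successful")


def overall_result(text):
    """Return True or False for the final verdict line, or None if there is none."""
    verdict = None
    for line in text.splitlines():
        match = VERDICT.match(line.strip())
        if match:
            verdict = match.group(1) is None  # later verdict lines override earlier ones
    return verdict


print(overall_result("Pre-check for database installation was unsuccessful on all the nodes."))
print(overall_result("Post-check for hardware and operating system setup was successful."))
```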

Verify the OCR component with cluvfy:

cluvfy comp ocr


Verifying OCR integrity

Checking OCR integrity...

Checking the absence of a non-clustered configuration...

All nodes free of non-clustered, local-only configurations

ASM Running check passed. ASM is running on all specified nodes

Checking OCR config file "/etc/oracle/ocr.loc"...

OCR config file "/etc/oracle/ocr.loc" check successful

Disk group for ocr location "+SYSTEMDG" available on all the nodes

Disk group for ocr location "+FRA" available on all the nodes

Disk group for ocr location "+DATA" available on all the nodes

NOTE:

This check does not verify the integrity of the OCR contents.

Execute 'ocrcheck' as a privileged user to verify the contents of OCR.

OCR integrity check passed

Verification of OCR integrity was successful.
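To pull the OCR locations out of this report, for example to cross-check them against ocrcheck, a regular expression over the "Disk group for ocr location" lines is one option. This sketch assumes the message wording shown above; `ocr_disk_groups` is a hypothetical helper.

```python
import re

# Captures the ASM disk group name, e.g. +SYSTEMDG, from the availability message.
OCR_LOCATION = re.compile(r'Disk group for ocr location "(\+\w+)" available on all the nodes')


def ocr_disk_groups(text):
    """Return the disk groups reported as available OCR locations, in order."""
    return OCR_LOCATION.findall(text)


sample = """
Disk group for ocr location "+SYSTEMDG" available on all the nodes

Disk group for ocr location "+FRA" available on all the nodes

Disk group for ocr location "+DATA" available on all the nodes
"""

print(ocr_disk_groups(sample))
```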
